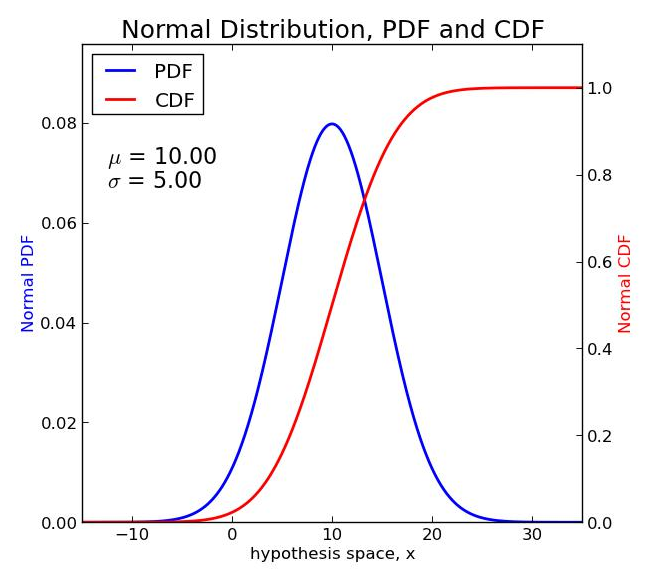

Univariate Gaussian distribution

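The pdf of a Gaussian distribution:

$$p(x \mid \mu, \sigma^2) = \mathcal{N}(x \mid \mu, \sigma^2) = \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)$$

where $\mu$ is the mean or center of mass and $\sigma^2$ is the variance.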
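As a quick numerical sanity check, the density $\frac{1}{\sqrt{2\pi\sigma^2}}\exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)$ can be evaluated directly. This is a minimal sketch using only the Python standard library. The function name `gaussian_pdf` is illustrative and does not come from the lecture.

```python
import math

def gaussian_pdf(x: float, mu: float, sigma2: float) -> float:
    """Density of N(mu, sigma2) evaluated at x."""
    if sigma2 <= 0:
        raise ValueError("variance must be positive")
    coeff = 1.0 / math.sqrt(2.0 * math.pi * sigma2)
    return coeff * math.exp(-((x - mu) ** 2) / (2.0 * sigma2))

# The density peaks at the mean and is symmetric around it.
print(gaussian_pdf(0.0, 0.0, 1.0))   # about 0.3989, i.e. 1 / sqrt(2 pi)
print(gaussian_pdf(1.0, 0.0, 1.0) == gaussian_pdf(-1.0, 0.0, 1.0))
```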

Covariance, correlation and multivariate Gaussians

Covariance

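The covariance between two r.v.'s $X$ and $Y$ measures the degree to which $X$ and $Y$ are (linearly) related. Covariance is defined as

$$\operatorname{cov}[X, Y] = \mathbb{E}\big[(X - \mathbb{E}[X])(Y - \mathbb{E}[Y])\big] = \mathbb{E}[XY] - \mathbb{E}[X]\,\mathbb{E}[Y]$$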
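A minimal sketch, assuming the expectations are taken under the empirical distribution of paired samples, so it divides by $n$ rather than $n-1$. It computes the covariance both from the definition $\mathbb{E}[(X - \mathbb{E}[X])(Y - \mathbb{E}[Y])]$ and from the shortcut $\mathbb{E}[XY] - \mathbb{E}[X]\mathbb{E}[Y]$. The helper names are hypothetical.

```python
def covariance(xs, ys):
    """E[(X - E[X])(Y - E[Y])] under the empirical distribution of the pairs."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n

def covariance_shortcut(xs, ys):
    """The same quantity computed as E[XY] - E[X] E[Y]."""
    n = len(xs)
    return sum(x * y for x, y in zip(xs, ys)) / n - (sum(xs) / n) * (sum(ys) / n)

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]          # ys = 2 * xs: the two move together
print(covariance(xs, ys))           # 2.5 (positive)
print(covariance_shortcut(xs, ys))  # 2.5 as well
print(covariance(xs, ys[::-1]))     # negative: one goes up while the other goes down
```

Dividing by $n$ matches the expectation-based definition above. An unbiased sample estimate would divide by $n - 1$ instead.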

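Expectation

$$\mathbb{E}[X] = \int x\, p(x)\, dx \approx \frac{1}{N}\sum_{i=1}^{N} x_i$$

Why can $\mathbb{E}[X]$ be estimated like this? First, let us approximate the integral by summing the values stacked up at each sample $x_i$. Then $p(x)$ can be thought of as a histogram estimator. To do this, we first define the function

$$p(x) \approx \frac{1}{N}\sum_{i=1}^{N} \delta_{x_i}(x)$$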

where $N$ is the total number of points and each $x_i$ is a sample point.

The function $\delta_{x_i}(x)$ is generally called the Dirac delta function.

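There are three main properties of the Dirac delta function:

1. $\delta_{x_i}(x) = 0$ for all $x \neq x_i$.
2. $\int \delta_{x_i}(x)\, dx = 1$.
3. $\int f(x)\, \delta_{x_i}(x)\, dx = f(x_i)$ (the sifting property).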

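From these properties of the $\delta$ function, with $f(x) = x$ and $p(x) = \frac{1}{N}\sum_{i=1}^{N}\delta_{x_i}(x)$:

$$\mathbb{E}[X] = \int x\, \frac{1}{N}\sum_{i=1}^{N}\delta_{x_i}(x)\, dx = \frac{1}{N}\sum_{i=1}^{N}\int x\, \delta_{x_i}(x)\, dx = \frac{1}{N}\sum_{i=1}^{N} x_i$$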
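Under the delta-function (histogram) approximation of $p(x)$, any expectation $\mathbb{E}[f(X)] = \int f(x)\,p(x)\,dx$ collapses to the sample average $\frac{1}{N}\sum_i f(x_i)$. The following is a small Monte Carlo sketch. It draws samples with Python's `random.gauss` from $\mathcal{N}(3, 1)$, which is an arbitrary choice for illustration. The helper `empirical_expectation` is hypothetical.

```python
import random

def empirical_expectation(samples, f=lambda x: x):
    """E[f(X)] with p(x) replaced by (1/N) sum_i delta_{x_i}(x): the integral becomes an average."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(f(x) for x in samples) / len(samples)

random.seed(0)
samples = [random.gauss(3.0, 1.0) for _ in range(100_000)]
mean_estimate = empirical_expectation(samples)                       # close to E[X] = 3
second_moment = empirical_expectation(samples, lambda x: x ** 2)     # close to 3^2 + 1 = 10
print(mean_estimate, second_moment)
```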

Covariance matrix

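If $X$ is a $d$-dimensional random vector, its covariance matrix is:

$$\operatorname{cov}[X] = \mathbb{E}\big[(X - \mathbb{E}[X])(X - \mathbb{E}[X])^{T}\big] = \begin{pmatrix} \operatorname{var}[X_1] & \operatorname{cov}[X_1, X_2] & \cdots & \operatorname{cov}[X_1, X_d] \\ \operatorname{cov}[X_2, X_1] & \operatorname{var}[X_2] & \cdots & \operatorname{cov}[X_2, X_d] \\ \vdots & \vdots & \ddots & \vdots \\ \operatorname{cov}[X_d, X_1] & \operatorname{cov}[X_d, X_2] & \cdots & \operatorname{var}[X_d] \end{pmatrix}$$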
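The definition $\mathbb{E}[(X - \mathbb{E}[X])(X - \mathbb{E}[X])^T]$ can be estimated from samples in the same empirical way. This pure-Python sketch assumes the samples are given as a list of equal-length lists. Entry $(a, b)$ is the covariance between components $a$ and $b$, and the diagonal holds the variances. The function name is hypothetical.

```python
def covariance_matrix(vectors):
    """Empirical E[(X - E[X])(X - E[X])^T] for a list of d-dimensional samples."""
    n = len(vectors)
    d = len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for v in vectors:
        centered = [v[j] - mean[j] for j in range(d)]
        # Accumulate the outer product (x - mean)(x - mean)^T, averaged over samples.
        for a in range(d):
            for b in range(d):
                cov[a][b] += centered[a] * centered[b] / n
    return cov

data = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
C = covariance_matrix(data)
print(C)  # diagonal: var[X1], var[X2]; off-diagonal: cov[X1, X2]
```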

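If $X \sim \mathcal{N}(\mu, \Sigma)$, its density is the multivariate Gaussian

$$p(x \mid \mu, \Sigma) = \frac{1}{(2\pi)^{d/2}\,|\Sigma|^{1/2}} \exp\left(-\frac{1}{2}(x - \mu)^{T}\Sigma^{-1}(x - \mu)\right)$$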

Bivariate Gaussian distribution example

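Assume we have two independent univariate Gaussian variables

$$x_1 \sim \mathcal{N}(\mu_1, \sigma_1^2), \qquad x_2 \sim \mathcal{N}(\mu_2, \sigma_2^2)$$

We first define:

$$x = \begin{pmatrix} x_1 \\ x_2 \end{pmatrix}, \qquad \mu = \begin{pmatrix} \mu_1 \\ \mu_2 \end{pmatrix}, \qquad \Sigma = \begin{pmatrix} \sigma_1^2 & 0 \\ 0 & \sigma_2^2 \end{pmatrix}$$

Their joint distribution is:

$$p(x_1, x_2) = p(x_1)\,p(x_2) = \frac{1}{2\pi\sigma_1\sigma_2}\exp\left(-\frac{(x_1-\mu_1)^2}{2\sigma_1^2} - \frac{(x_2-\mu_2)^2}{2\sigma_2^2}\right) = \frac{1}{2\pi|\Sigma|^{1/2}}\exp\left(-\frac{1}{2}(x-\mu)^{T}\Sigma^{-1}(x-\mu)\right)$$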
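This sketch checks numerically that the product of two independent univariate densities equals the matrix form $\frac{1}{2\pi|\Sigma|^{1/2}}\exp\left(-\frac12(x-\mu)^T\Sigma^{-1}(x-\mu)\right)$ with a diagonal $\Sigma = \operatorname{diag}(\sigma_1^2, \sigma_2^2)$. The test point, means and variances are arbitrary illustrative values.

```python
import math

def gaussian_pdf(x, mu, sigma2):
    """Univariate N(x | mu, sigma2)."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma2)) / math.sqrt(2 * math.pi * sigma2)

def bivariate_diag_pdf(x, mu, s1, s2):
    """N(x | mu, Sigma) in matrix form, for Sigma = diag(s1, s2)."""
    det = s1 * s2
    # (x - mu)^T Sigma^{-1} (x - mu) reduces to a weighted sum when Sigma is diagonal.
    quad = (x[0] - mu[0]) ** 2 / s1 + (x[1] - mu[1]) ** 2 / s2
    return math.exp(-0.5 * quad) / (2 * math.pi * math.sqrt(det))

x, mu = (0.3, -1.2), (0.0, 1.0)
joint = gaussian_pdf(x[0], mu[0], 2.0) * gaussian_pdf(x[1], mu[1], 0.5)
matrix_form = bivariate_diag_pdf(x, mu, 2.0, 0.5)
print(abs(joint - matrix_form) < 1e-12)  # True: independence gives a diagonal covariance
```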

Likelihood

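Let's assume that we have $n$ data points $y_1, \dots, y_n$, which are independent and Gaussian with unknown mean $\theta$ and variance 1:

$$y_i \sim \mathcal{N}(\theta, 1) = \theta + \mathcal{N}(0, 1)$$

with likelihood $P(y_1, \dots, y_n \mid \theta) = \prod_{i=1}^{n} P(y_i \mid \theta)$.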

Finding the $\theta$ that maximizes the likelihood is equivalent to sliding the Gaussian along the axis until the likelihood is maximized.
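The "moving the Gaussian" picture can be sketched as a grid search. The code evaluates $\log P(y_1, \dots, y_n \mid \theta)$ for many candidate means $\theta$ and keeps the best one. The observations below are hypothetical. For variance 1 the maximizer is known to be the sample mean, so the grid winner should land next to it.

```python
import math

def log_likelihood(theta, ys):
    """log prod_i N(y_i | theta, 1) = -(n/2) log(2 pi) - (1/2) sum_i (y_i - theta)^2."""
    n = len(ys)
    return -0.5 * n * math.log(2 * math.pi) - 0.5 * sum((y - theta) ** 2 for y in ys)

ys = [2.0, 0.5, 1.1]                        # hypothetical observations
grid = [i / 100 for i in range(-200, 401)]  # candidate positions of the Gaussian's center
best = max(grid, key=lambda t: log_likelihood(t, ys))
print(best, sum(ys) / len(ys))              # grid winner vs. sample mean
```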

The likelihood for linear regression

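Let us assume that each label $y_i$ is Gaussian distributed with mean $x_i^T\theta$ and variance $\sigma^2$, which in short we write as:

$$y_i \sim \mathcal{N}(x_i^T\theta, \sigma^2) = x_i^T\theta + \mathcal{N}(0, \sigma^2)$$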

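We can then write the “probability” of the “data” given the “parameters” as a constant factor times the exponential of the (scaled, negated) quadratic loss function:

$$p(\mathbf{y} \mid X, \theta, \sigma) = \prod_{i=1}^{n} p(y_i \mid x_i, \theta, \sigma) = (2\pi\sigma^2)^{-n/2} \exp\left(-\frac{1}{2\sigma^2}\sum_{i=1}^{n}(y_i - x_i^T\theta)^2\right)$$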

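Let's bring back the quadratic loss we used last time:

$$J(\theta) = (\mathbf{y} - X\theta)^T(\mathbf{y} - X\theta) = \sum_{i=1}^{n}(y_i - x_i^T\theta)^2$$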

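We place one Gaussian at each point. The mean of each Gaussian is $x_i^T\theta$. Each probability is then the vertical distance from the point up to its Gaussian curve, that is, the height of the Gaussian density at $y_i$. With this transformation, the problem becomes

$$\max_{\theta}\; p(\mathbf{y} \mid X, \theta, \sigma) \quad \text{OR} \quad \min_{\theta}\; J(\theta)$$

because the likelihood depends on $\theta$ only through the factor $\exp\left(-J(\theta)/(2\sigma^2)\right)$.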
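The equivalence between maximizing the likelihood and minimizing the quadratic loss can be checked numerically. For any $\theta$, $-\log p(\mathbf{y} \mid X, \theta, \sigma) = \frac{n}{2}\log(2\pi\sigma^2) + \frac{1}{2\sigma^2}J(\theta)$, which is a constant plus a positive multiple of $J(\theta) = \sum_i (y_i - x_i^T\theta)^2$. The sketch below uses hypothetical data, and the first column of `X` is an intercept.

```python
import math

def predict_mean(xi, theta):
    """x_i^T theta."""
    return sum(a * t for a, t in zip(xi, theta))

def squared_loss(theta, X, y):
    """J(theta) = sum_i (y_i - x_i^T theta)^2."""
    return sum((yi - predict_mean(xi, theta)) ** 2 for xi, yi in zip(X, y))

def neg_log_likelihood(theta, X, y, sigma2):
    """-log prod_i N(y_i | x_i^T theta, sigma2), summed point by point."""
    return sum(
        0.5 * math.log(2 * math.pi * sigma2) + (yi - predict_mean(xi, theta)) ** 2 / (2 * sigma2)
        for xi, yi in zip(X, y)
    )

X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]]  # hypothetical inputs; column 0 is the intercept
y = [0.9, 3.1, 4.8]                       # hypothetical labels
sigma2 = 0.25

for theta in ([1.0, 2.0], [0.0, 1.0]):
    nll = neg_log_likelihood(theta, X, y, sigma2)
    via_loss = len(y) / 2 * math.log(2 * math.pi * sigma2) + squared_loss(theta, X, y) / (2 * sigma2)
    print(theta, nll, abs(nll - via_loss) < 1e-9)  # the last value is True for every theta
```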

Maximum likelihood

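The maximum likelihood estimate (MLE) of $\theta$ is obtained by taking the derivative of the log-likelihood, $\log p(\mathbf{y} \mid X, \theta, \sigma)$, and setting it to zero:

$$\log p(\mathbf{y} \mid X, \theta, \sigma) = -\frac{n}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}(\mathbf{y} - X\theta)^T(\mathbf{y} - X\theta)$$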

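Taking the logarithm makes the likelihood easier to compute with. The product over data points becomes a sum, and because $\log$ is monotonically increasing, the maximizer does not change.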

The goal is to maximize the likelihood of seeing the training data by modifying the parameters $(\theta, \sigma)$.

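Equivalently, the goal is to maximize the log-likelihood $\log p(\mathbf{y} \mid X, \theta, \sigma)$ or minimize the negative log-likelihood $-\log p(\mathbf{y} \mid X, \theta, \sigma)$.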

The maximum likelihood (ML) estimate of $\theta$ is:

$$\hat{\theta}_{ML} = (X^TX)^{-1}X^T\mathbf{y}$$

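The maximum likelihood (ML) estimate of $\sigma^2$ is:

$$\hat{\sigma}^2_{ML} = \frac{1}{n}(\mathbf{y} - X\hat{\theta}_{ML})^T(\mathbf{y} - X\hat{\theta}_{ML}) = \frac{1}{n}\sum_{i=1}^{n}(y_i - x_i^T\hat{\theta}_{ML})^2$$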
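This is a pure-Python sketch of the two ML estimates, $\hat{\theta}_{ML} = (X^TX)^{-1}X^T\mathbf{y}$ and $\hat{\sigma}^2_{ML} = \frac{1}{n}\sum_i (y_i - x_i^T\hat{\theta}_{ML})^2$. It assumes `X` is a list of rows with an intercept column added by hand, and `y` is a list of labels. It solves the normal equations $X^TX\theta = X^T\mathbf{y}$ by Gaussian elimination rather than forming the inverse explicitly, which is the usual numerically safer choice. In practice a library least-squares routine would be used instead. The helper names are hypothetical. The data are also hypothetical and lie exactly on $y = 1 + 2x$, so the fit should recover $\theta \approx (1, 2)$ and $\hat{\sigma}^2 \approx 0$.

```python
def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("matrix is (numerically) singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z

def fit_ml(X, y):
    """Return (theta_ML, sigma2_ML) for the linear-Gaussian model."""
    d = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(d)] for i in range(d)]
    Xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(d)]
    theta = solve(XtX, Xty)  # normal equations X^T X theta = X^T y
    residuals = [yi - sum(a * t for a, t in zip(row, theta)) for row, yi in zip(X, y)]
    sigma2 = sum(r * r for r in residuals) / len(y)  # divide by n: the ML estimate
    return theta, sigma2

X = [[1.0, 0.0], [1.0, 1.0], [1.0, 2.0], [1.0, 3.0]]  # intercept column + one feature
y = [1.0, 3.0, 5.0, 7.0]                              # exactly y = 1 + 2x
theta, sigma2 = fit_ml(X, y)
print(theta, sigma2)  # approximately [1.0, 2.0] and 0.0
```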

Making predictions

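Given the training data $D = \{X, \mathbf{y}\}$, the prediction for a new input $x_*$ with known $\sigma^2$ is given by:

$$p(y \mid x_*, D, \sigma^2) = \mathcal{N}(y \mid x_*^T\hat{\theta}_{ML}, \sigma^2)$$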
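Since the predictive distribution is Gaussian with mean $x_*^T\hat{\theta}_{ML}$ and variance $\sigma^2$, a point prediction and an approximate 95% interval ($\pm 1.96\sigma$) follow directly. In this sketch, `theta_ml` and `sigma2` are hypothetical values standing in for a fitted model.

```python
import math

def predict(x_star, theta_ml, sigma2):
    """Mean and ~95% interval of the predictive N(y | x_*^T theta_ML, sigma2)."""
    mean = sum(a * t for a, t in zip(x_star, theta_ml))
    half_width = 1.96 * math.sqrt(sigma2)  # 1.96 standard deviations covers about 95%
    return mean, (mean - half_width, mean + half_width)

theta_ml = [1.0, 2.0]  # hypothetical fitted parameters: intercept, slope
mean, (low, high) = predict([1.0, 4.0], theta_ml, sigma2=0.25)
print(mean, low, high)  # mean is 1 + 2 * 4 = 9
```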

Entropy

Bernoulli

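A Bernoulli random variable (r.v.) $X$ takes values in $\{0, 1\}$:

$$p(X = 1 \mid \theta) = \theta, \qquad p(X = 0 \mid \theta) = 1 - \theta$$

where $\theta \in [0, 1]$. We can write this probability more succinctly as follows:

$$p(x \mid \theta) = \theta^{x}(1 - \theta)^{1 - x}$$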
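The compact form $\theta^x(1-\theta)^{1-x}$ picks out $\theta$ when $x = 1$ and $1 - \theta$ when $x = 0$. Here is a one-function sketch. The name `bernoulli_pmf` is illustrative.

```python
def bernoulli_pmf(x: int, theta: float) -> float:
    """p(x | theta) = theta^x (1 - theta)^(1 - x) for x in {0, 1} and theta in [0, 1]."""
    if x not in (0, 1):
        raise ValueError("x must be 0 or 1")
    if not 0.0 <= theta <= 1.0:
        raise ValueError("theta must lie in [0, 1]")
    return theta ** x * (1.0 - theta) ** (1 - x)

print(bernoulli_pmf(1, 0.3))  # theta
print(bernoulli_pmf(0, 0.3))  # 1 - theta
```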

Entropy

In information theory, entropy is a measure of the uncertainty associated with a random variable. It is defined as:

$$H(X) = -\sum_{x} p(x \mid \theta)\log p(x \mid \theta)$$

Example: for a Bernoulli variable $X$, the entropy is:

$$H(X) = -\theta\log\theta - (1 - \theta)\log(1 - \theta)$$
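This sketch implements the discrete entropy definition and its Bernoulli special case, using the convention $0 \log 0 = 0$. The `base` parameter is an illustrative addition: base 2 gives bits, and the default natural log gives nats. The Bernoulli entropy is largest at $\theta = 0.5$ and zero when the outcome is certain.

```python
import math

def entropy(probs, base=math.e):
    """H(X) = -sum_x p(x) log p(x), skipping zero-probability outcomes (0 log 0 = 0)."""
    return sum(-p * math.log(p, base) for p in probs if p > 0)

def bernoulli_entropy(theta, base=math.e):
    """H(X) = -theta log theta - (1 - theta) log(1 - theta)."""
    return entropy([theta, 1.0 - theta], base)

print(bernoulli_entropy(0.5, base=2))  # 1 bit: a fair coin is maximally uncertain
print(bernoulli_entropy(0.9, base=2))  # less than 1 bit: the outcome is more predictable
print(bernoulli_entropy(1.0))          # a certain outcome has zero entropy
```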

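Entropy of a Gaussian in D dimensions

$$H\big(\mathcal{N}(x \mid \mu, \Sigma)\big) = \frac{1}{2}\log\left[(2\pi e)^{D}\,|\Sigma|\right]$$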
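The entropy of a $D$-dimensional Gaussian, $\frac{1}{2}\log\left[(2\pi e)^D|\Sigma|\right]$, depends only on the determinant of the covariance. This standard-library sketch uses the natural log, so the result is in nats. It relies on a small hypothetical determinant helper based on Gaussian elimination. For a diagonal $\Sigma$ the result reduces to the sum of the 1-D entropies.

```python
import math

def determinant(A):
    """Determinant by Gaussian elimination with partial pivoting."""
    M = [row[:] for row in A]
    n = len(M)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0.0:
            return 0.0
        if pivot != col:
            M[col], M[pivot] = M[pivot], M[col]
            det = -det  # a row swap flips the sign
        det *= M[col][col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n):
                M[r][c] -= factor * M[col][c]
    return det

def gaussian_entropy(Sigma):
    """H = 1/2 log[(2 pi e)^D |Sigma|] in nats, for a D x D covariance Sigma."""
    D = len(Sigma)
    det = determinant(Sigma)
    if det <= 0:
        raise ValueError("covariance must be positive definite")
    return 0.5 * (D * math.log(2 * math.pi * math.e) + math.log(det))

print(gaussian_entropy([[1.0]]))                  # 1-D standard normal, about 1.4189 nats
print(gaussian_entropy([[2.0, 0.5], [0.5, 1.0]]))  # correlated 2-D example
```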

MLE - properties

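For independent and identically distributed (i.i.d.) data from $p(x \mid \theta_0)$ (where $\theta_0$ is the true parameter, also called the parameter of god, i.e. the parameter generated by nature), the MLE minimizes the Kullback-Leibler divergence:

$$\begin{aligned} \hat{\theta} &= \arg\max_{\theta} \prod_{i=1}^{N} p(x_i \mid \theta) = \arg\max_{\theta} \sum_{i=1}^{N}\log p(x_i \mid \theta) \\ &= \arg\max_{\theta} \frac{1}{N}\sum_{i=1}^{N}\log p(x_i \mid \theta) - \frac{1}{N}\sum_{i=1}^{N}\log p(x_i \mid \theta_0) \\ &= \arg\max_{\theta} \frac{1}{N}\sum_{i=1}^{N}\log\frac{p(x_i \mid \theta)}{p(x_i \mid \theta_0)} \\ &\longrightarrow \arg\min_{\theta}\int p(x \mid \theta_0)\log\frac{p(x \mid \theta_0)}{p(x \mid \theta)}\,dx = \arg\min_{\theta}\, \mathrm{KL}\big(p(x \mid \theta_0)\,\|\,p(x \mid \theta)\big) \end{aligned}$$

The subtracted term does not depend on $\theta$, so it does not change the maximizer. The limit holds as $N \to \infty$ by the law of large numbers.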
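For the unit-variance Gaussian model $p(x \mid \theta) = \mathcal{N}(x \mid \theta, 1)$, the KL divergence has a closed form, which makes the argument easy to check by simulation. The sketch assumes a hypothetical true parameter $\theta_0 = 0$. The average log-likelihood ratio over samples should approach the closed-form KL. On a grid of candidates, the KL is minimized at $\theta_0$, and the log-likelihood is maximized at the same grid point. The helper names are illustrative.

```python
import math
import random

def log_normal_pdf(x, mu, s):
    """log N(x | mu, s) for variance s."""
    return -0.5 * math.log(2 * math.pi * s) - (x - mu) ** 2 / (2 * s)

def kl_gaussian(mu0, s0, mu1, s1):
    """Closed-form KL(N(mu0, s0) || N(mu1, s1)) for variances s0, s1."""
    return 0.5 * (math.log(s1 / s0) + (s0 + (mu0 - mu1) ** 2) / s1 - 1.0)

random.seed(1)
theta0 = 0.0  # hypothetical "true" parameter used to generate the data
xs = [random.gauss(theta0, 1.0) for _ in range(20_000)]

# (1/N) sum_i log[p(x_i | theta0) / p(x_i | theta)] estimates KL(p(.|theta0) || p(.|theta)).
theta = 1.0
avg_log_ratio = sum(
    log_normal_pdf(x, theta0, 1.0) - log_normal_pdf(x, theta, 1.0) for x in xs
) / len(xs)
print(avg_log_ratio, kl_gaussian(theta0, 1.0, theta, 1.0))  # both close to 0.5

# On a grid of candidates, the KL minimizer and the log-likelihood maximizer coincide.
grid = [i / 10 for i in range(-20, 21)]
best_kl = min(grid, key=lambda t: kl_gaussian(theta0, 1.0, t, 1.0))
best_ll = max(grid, key=lambda t: sum(log_normal_pdf(x, t, 1.0) for x in xs))
print(best_kl, best_ll)
```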

This post is a summary written after taking the Oxford machine learning course.